Why Your AI Agents Aren't Smart: The Memory Systems Revolution You're Missing

Your AI agents have world-class LLMs and meticulously engineered prompts, and they run on the finest AWS infrastructure money can buy. Yet they still act surprisingly dumb. They forget crucial context from last week’s meeting, fail to connect a customer complaint to a product flaw your engineering team already fixed, and cannot orchestrate a simple handoff between sales and legal.

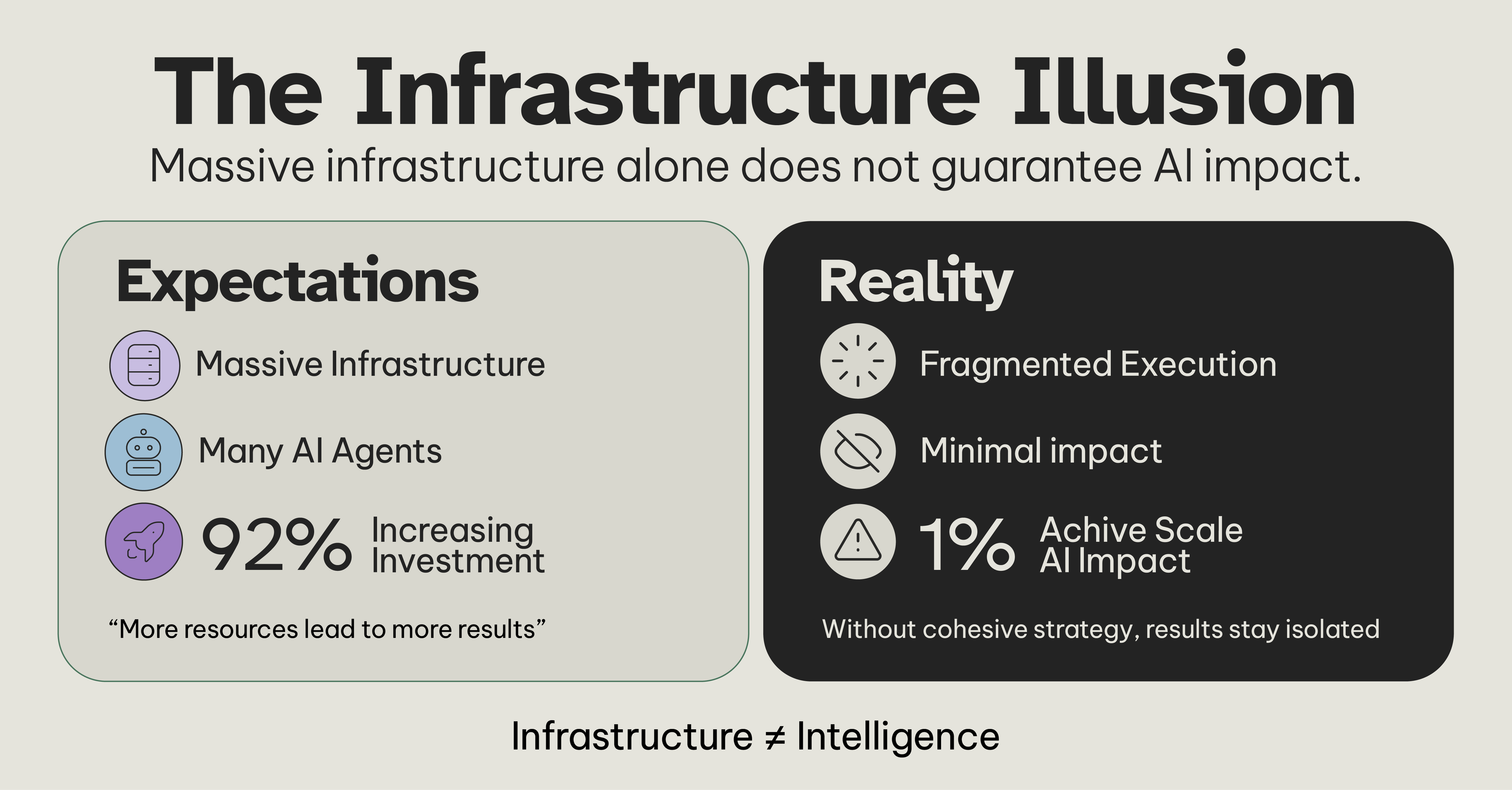

Why? Because embeddings aren't memory, RAG isn't learning, and a massive context window is not organizational intelligence. You’ve invested in the engine, but you’re missing the central nervous system.

This is the silent crisis of modern AI deployment. As discussions at AWS re:Invent 2025 focused on building and scaling agents, and as global leaders prepare for the World Economic Forum's 2026 challenge to “deploy innovation at scale and responsibly,” a critical gap remains unaddressed. The bridge between powerful infrastructure and transformative business outcomes is built on memory.

The Three-Stage Evolution of AI Memory: Why Most Enterprises Are Stuck in Stage 1

To understand why your agents are limited, you must see memory not as a feature, but as the foundational architecture for intelligence. Its evolution reveals why most corporate AI initiatives are hitting a wall.

Stage 1: The Illusion of Memory (Simple Embeddings)

For years, the state of the art was vector similarity search. You chunk your documents, create embeddings, and hope the right chunk is retrieved. This isn't memory; it's a sophisticated lookup table. It has no understanding of relationships, no sense of time, and no ability to consolidate information. It’s why your agent can find a contract clause but cannot tell you how that clause impacted three different departments last quarter.
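To make the "lookup table" point concrete, here is a minimal sketch of Stage 1 retrieval. The chunks and their three-dimensional vectors are invented for illustration (a real system would get embeddings from a model), and the names `CHUNKS`, `cosine`, and `retrieve` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical document chunks with made-up embeddings (assumption:
# a real pipeline would compute these with an embedding model).
CHUNKS = {
    "Termination clause: 30 days notice": [0.9, 0.1, 0.0],
    "Q3 support ticket volume rose": [0.1, 0.8, 0.2],
    "Legal flagged the termination clause for sales": [0.7, 0.2, 0.4],
}

def retrieve(query_vec, k=1):
    """Return the k chunks whose embeddings are closest to the query."""
    ranked = sorted(
        CHUNKS,
        key=lambda text: cosine(query_vec, CHUNKS[text]),
        reverse=True,
    )
    return ranked[:k]

# A query vector that points at "contract terms" in this toy space.
top = retrieve([1.0, 0.0, 0.0])
print(top)
```

The function returns the nearest chunk and nothing else. It carries no record of which departments the clause touched or when, which is the gap the later stages try to close.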

Stage 2: The Context Patch (RAG Systems)

Retrieval-Augmented Generation (RAG) was a necessary leap. By pulling relevant document chunks into the prompt, it grounds responses in your data. Yet, it remains a patchwork solution. As one analysis notes, without a structured memory layer, “AI assistants struggle with context retention over long interactions [and] cannot reason across systems.” RAG retrieves facts but doesn’t build understanding. It answers “what” but rarely “why” or “how.”

Stage 3: The Relationship Revolution (Graph-Based Memory Systems)

This is the stage where agents begin to reason about relationships rather than just retrieve text. Here, memory is structured as a dynamic knowledge graph: a network of entities (people, projects, products) and the relationships between them. This mirrors how your organization actually works.
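As a rough illustration of what "entities and their relationships" can look like in practice, the sketch below stores facts as dated edges between named entities. It is a toy in-memory structure under stated assumptions, not a description of any particular graph database; `GraphMemory`, `Edge`, and the sample entities are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Edge:
    relation: str   # e.g. "signed", "raised"
    target: str     # entity the relation points to
    observed: str   # ISO date the fact was recorded

class GraphMemory:
    """Toy graph memory: entities are strings, facts are dated edges."""

    def __init__(self):
        self._out = defaultdict(list)

    def remember(self, source, relation, target, observed):
        """Record that `source` has `relation` to `target` as of `observed`."""
        self._out[source].append(Edge(relation, target, observed))

    def neighbors(self, entity, relation=None):
        """Edges leaving `entity`, optionally filtered by relation type."""
        return [
            edge for edge in self._out.get(entity, [])
            if relation is None or edge.relation == relation
        ]

memory = GraphMemory()
memory.remember("Acme Corp", "signed", "Contract-42", "2025-01-10")
memory.remember("Acme Corp", "raised", "Ticket-981", "2025-03-02")
memory.remember("Ticket-981", "concerns", "Export feature", "2025-03-02")

for edge in memory.neighbors("Acme Corp"):
    print(edge.observed, edge.relation, edge.target)
```

Unlike a bag of embedded chunks, each fact here says who is connected to what and when it was observed, so a question about the customer can start from the customer and follow its edges.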

The High Cost of Forgetting: How Dumb Agents Cripple Your Business

The limitations of Stages 1 and 2 translate into direct, measurable business pain, perfectly aligning with the frustrations voiced in recent enterprise community discussions:

- The Cross-Functional Coordination Trap: You deploy a brilliant sales agent and an efficient support agent. They cannot collaborate on a renewals process because they lack shared memory of the customer’s journey. This siloing is why 42% of executives say AI adoption is creating internal conflicts. You’ve automated silos instead of bridging them.

- The Eternal "Day One" Problem: Every interaction is a reset. An agent can’t learn from an expert’s correction, so the same mistake is made repeatedly. This stalls velocity and erodes trust, contributing to the stark pilot-to-production gap where 65% of enterprises have agentic AI pilots, but only 11% achieve full deployment.

- The Strategic Blind Spot: Without relational memory, your AI cannot perform multi-hop reasoning. It cannot trace a supply chain delay back to a specific regulatory change mentioned in an email thread two months prior. Your agents react; they cannot proactively connect dots. This leaves the $4.4 trillion productivity potential identified by McKinsey firmly locked away.
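The multi-hop reasoning mentioned in the last point can be sketched as a graph traversal. The example below is a minimal illustration, assuming the relevant facts already exist as edges; the `EDGES` data and the `trace` helper are hypothetical, and breadth-first search is used simply because it is the easiest way to find a shortest chain of relationships.

```python
from collections import deque

# Hypothetical facts, stored as entity -> [(relation, entity), ...].
EDGES = {
    "supply chain delay": [("caused_by", "customs hold")],
    "customs hold": [("triggered_by", "regulatory change")],
    "regulatory change": [("mentioned_in", "email thread")],
}

def trace(start, goal):
    """Breadth-first search returning the chain of entities and
    relations linking `start` to `goal`, or None if no chain exists."""
    queue = deque([(start, [start])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, nxt in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [relation, nxt]))
    return None

print(trace("supply chain delay", "email thread"))
```

Each hop is a stored relationship, so the answer comes with its own explanation: the path itself is the chain of reasons.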

This is the core disconnect. The WEF asks leaders to deploy innovation “responsibly.” Responsibility in AI isn’t just an ethics checkbox; it’s functional reliability. An agent that forgets critical context or misconstrues relationships is inherently irresponsible because its outputs are untrustworthy.

Building Organizational Intelligence: The Memory-First Framework

Closing this gap requires a shift from deploying AI tools to cultivating Organizational Intelligence (OI). At Salfati Group, we see OI as the systems layer that turns infrastructure into reliable, cross-functional capability. Its core is a memory architecture that enables your organization to Sense, Understand, Decide, Act, and Learn as a coherent whole.

Here is a practical framework to diagnose your memory maturity and guide your next steps:

The Executive Playbook: From Dumb Agents to Organizational Intelligence

- Audit Your "Memory Stack": Don't just ask what AI tools you have. Ask: Where and how do our systems retain and connect information? Map out your current use of embeddings, RAG, and any nascent knowledge graphs. Most leaders are shocked to find their multi-million dollar AI initiative rests on Stage 1 technology.

- Pilot a Relationship-Critical Process: Identify one cross-functional workflow where relationships are key (e.g., customer onboarding linking sales, success, and product). Instead of building three separate agents, pilot a single orchestrated workflow powered by a graph that models the relationships between the customer, their contract, support tickets, and feature usage.

- Measure Learning, Not Just Output: Shift a key metric. Beyond task completion speed, measure knowledge velocity: How quickly is an expert's correction propagated across the system? How often does an agent successfully reference a past decision?

- Demand "Stateful" Orchestration: When evaluating platforms, move beyond "agent builders." Ask: How does your platform maintain state and context across a multi-step, multi-agent workflow? The right platform acts as a central nervous system, not just a collection of individual muscles.
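To show what "maintaining state across a multi-step, multi-agent workflow" can mean at its simplest, here is a sketch in which two agent steps share one state object. Everything in it is an assumption made for illustration: `WorkflowState`, `sales_step`, `legal_step`, and `run` are hypothetical names, and a real platform would persist this state rather than hold it in memory.

```python
from dataclasses import dataclass, field

@dataclass
class WorkflowState:
    """Shared context handed from one agent step to the next."""
    customer: str
    facts: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

def sales_step(state):
    # The sales agent records what it agreed with the customer.
    state.facts["discount"] = "10%"
    state.history.append("sales: agreed discount")
    return state

def legal_step(state):
    # The legal agent reads the sales agent's fact instead of starting cold.
    discount = state.facts.get("discount", "none")
    state.history.append(f"legal: drafted contract with discount {discount}")
    return state

def run(state, steps):
    """Run each step in order, threading the same state through all of them."""
    for step in steps:
        state = step(state)
    return state

final = run(WorkflowState("Acme Corp"), [sales_step, legal_step])
print(final.history)
```

The design choice worth noticing is that the handoff is data, not a prompt: the legal step does not need the sales conversation replayed, only the facts the sales step wrote down.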

The Salfati Lens: Intelligence as an Integrated System

Our work is rooted in the principle that AI-native must be human-first. This means building systems that assume the burden of work (the searching, the synthesizing, the routing) to liberate human judgment. The linchpin of this philosophy is a memory architecture designed for the organization, not just the application.

The enterprise AI conversation is maturing. It is moving from "What can an agent do?" to "How do we build an organization that learns?" The winners in the next decade won't be those with the most AI pilots, but those with the fastest organizational learning loops. They will have moved beyond agents with amnesia to systems with a durable awareness of context, capable of the strategic foresight and reliable execution that such memory makes possible.

Elevate your OI understanding and strategy with our new whitepaper “Beyond Infrastructure: The Organizational Intelligence Blueprint for Turning AI Capabilities into Unfair Advantages”.